Syncopated Science: My Rhythms of Research and Self-Development

Part I: An Upbringing Long Ago – My Times of Education

Looking back on my days at vocational high school, I was surrounded by a community of hackers. At the time, HTTPS was only beginning to become widespread, and tools like Firesheep could easily hijack unencrypted sessions from anyone on the same local Wi-Fi network. My peers were quintessential teenage hackers from New Jersey, and I absorbed a tremendous amount from them. That immersion in a tech-savvy environment paved the way for a career in cybersecurity immediately after I completed my degree. As I entered my final semester, I landed a job as a cybersecurity analyst at a financial firm, a role in which my ability to communicate effectively was a significant asset. That skill helped me advance quickly to my current position.

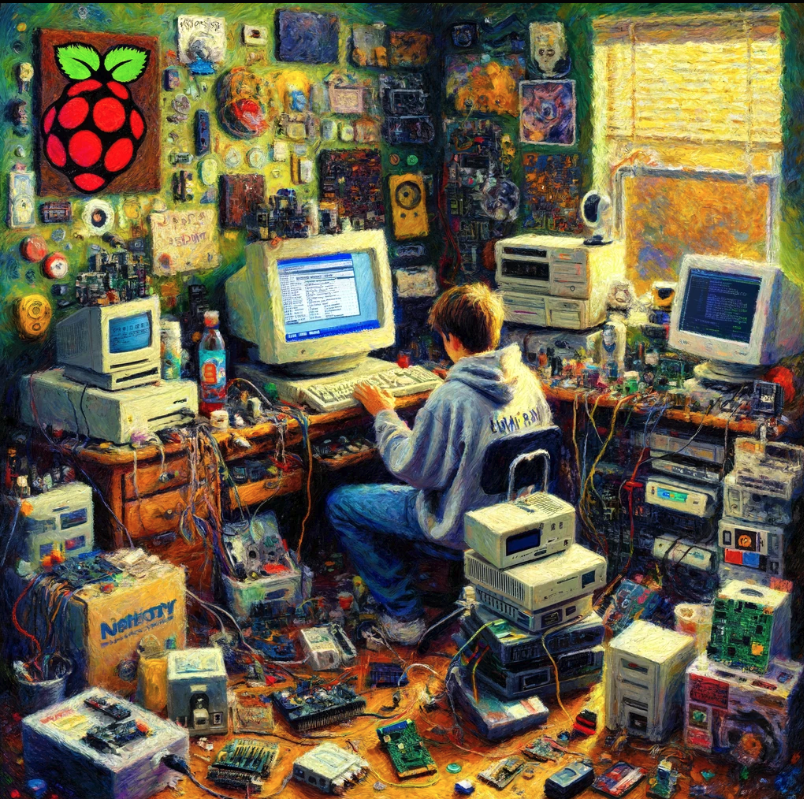

Throughout this period, and especially during college, I experienced intense bursts of creativity and productivity that some might describe as manic episodes. During these phases, I would spend weeks engrossed in coding, building systems and applications with no direct need for them, purely as a hobby. I began collecting Raspberry Pis, setting up databases, and learning web development frameworks like Ruby on Rails and Django for Python. These tools and skills, first pursued out of sheer interest, became invaluable years later when I set out to build my own company. This journey, from a vocational high school full of early hackers to a career in cybersecurity and eventually entrepreneurship, highlights the unpredictable yet profoundly influential path on which my curiosity and passion for technology have taken me.

From those formative years, I recall the thrill of cracked copies of Windows Server 2003 being passed around, and some classmates' strategic regression to Windows 95, exploiting its lax handling of file system metadata to obscure the true origins of files. These exploits, while ethically dubious, were part of the landscape that fueled my curiosity about, and understanding of, computer systems. I navigated these waters hoping not to cross any significant ethical lines, much like the innocent mischief of my childhood. This habit of breaking things to understand and fix them started at around eight years old, when my cousin Angelo taught me the trick of making a floppy disk writable by punching a hole in it. Fueled by curiosity, I eagerly applied it to my Berenstain Bears game: a far cry from the complexities of cybersecurity, but indicative of my early fascination with technology.

High school was notably enriched by the IT Essentials program, where my engagement with technology became more hands-on. We worked with chips and used them to build devices like shock pens, microphones, and voice changers, gadgets comparable to the educational kits sold at Target today to spark a child's interest in coding and building. This work gave me a foundational appreciation for hands-on technology and led naturally into my explorations in robotics. I built a touch screen from simple low-megapixel webcams and a gutted computer scanner, as well as robots that could follow lines on the ground and detect collisions, a primitive version of what we now know as the Roomba. Watching these robots navigate and "learn" their environment, I pondered the nature of that learning. Was it true learning, or merely a record of coordinates to avoid in the future? Because progress was measured in wheel turns and distance covered, it seemed more like programmed avoidance than actual learning. Still, this early exposure to the basics of machine behavior and artificial intelligence was a critical stepping stone in my journey through technology.
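The "recording coordinates" interpretation can be made concrete. The sketch below is a hypothetical reconstruction, not the original robot code: it assumes a wheel encoder (`TICKS_PER_REV`) and wheel size (`WHEEL_DIAMETER_CM`) chosen purely for illustration, converts wheel turns into distance (dead reckoning), and remembers the grid cell where each collision happened so the robot can avoid it later. Nothing in it generalizes beyond the exact cells it has bumped into, which is the sense in which it records rather than learns.

```python
import math

# Hypothetical hardware constants, chosen only for illustration.
WHEEL_DIAMETER_CM = 6.5
TICKS_PER_REV = 20  # encoder ticks per full wheel turn


def ticks_to_cm(ticks: int) -> float:
    """Convert counted wheel turns (encoder ticks) into distance travelled."""
    return ticks / TICKS_PER_REV * math.pi * WHEEL_DIAMETER_CM


class CollisionMemory:
    """Dead-reckoning position tracker that remembers where bumps occurred."""

    def __init__(self, cell_cm: float = 10.0) -> None:
        self.cell_cm = cell_cm   # size of one grid cell used to bucket positions
        self.blocked = set()     # grid cells where a collision was recorded
        self.x = 0.0
        self.y = 0.0
        self.heading = 0.0       # radians; 0 means facing along +x

    def _cell(self, x: float, y: float) -> tuple:
        return (round(x / self.cell_cm), round(y / self.cell_cm))

    def _project(self, ticks: int) -> tuple:
        d = ticks_to_cm(ticks)
        return (self.x + d * math.cos(self.heading),
                self.y + d * math.sin(self.heading))

    def drive(self, ticks: int) -> None:
        self.x, self.y = self._project(ticks)

    def turn(self, radians: float) -> None:
        self.heading = (self.heading + radians) % (2 * math.pi)

    def bump(self) -> None:
        # "Learning" here is just storing the coordinates of the collision.
        self.blocked.add(self._cell(self.x, self.y))

    def is_blocked_ahead(self, ticks: int) -> bool:
        return self._cell(*self._project(ticks)) in self.blocked
```

For example, a robot that drives 40 ticks, bumps, turns around, drives back, and turns around again will report the spot ahead as blocked, but only that exact spot.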

Part II: University, Biochem, Physics and Tech

Upon entering college, I encountered two significant challenges. First, I had originally applied to become a physics teacher, a difficult path given the competitive market for secondary school teaching jobs. I switched course during freshman year to pursue pre-med, aspiring to become a neurosurgeon, without realizing that by junior year my squeamishness would keep me from continuing down that path. My focus was cellular biology and neuroscience (CBN). In that field, I learned about cognition, how the brain interacts with the rest of the nervous system, its various functions, and intriguing concepts drawn from observing the outcomes of stroke victims.

Fascinated by the complexity of the human brain, I was particularly intrigued by conditions like Broca's aphasia, which results from damage to Broca's area, a region responsible for speech production. Patients with Broca's aphasia understand language but struggle to speak or write fluently, often producing speech that is meaningful yet halting and laborious. Wernicke's aphasia, by contrast, arises from damage to Wernicke's area, which is crucial for language comprehension. Those affected can produce fluent speech, but it often lacks meaning or is jumbled, and they have difficulty understanding spoken or written language. Highlighting both the brain's fragility and its resilience, these conditions underscored the profound impact of strokes and injuries, which sometimes lead to remarkable changes in abilities or perceptions.

Some individuals even exhibit almost savant-like abilities, in some cases emerging after strokes or other damage to particular brain areas. One well-known case is a man who memorized an extensive number of digits of pi and learned multiple languages, abilities attributed to his high-functioning Asperger's syndrome.

Among the many extraordinary cases that captivated me was the story of a man who, due to cerebral blindness caused by a malfunction in his brain's neuronal networks, discovered an unconventional path to regaining his sight. Cerebral blindness differs from ocular blindness in that it results from brain damage rather than from problems with the eyes themselves. This man found that playing the piano, an activity he was deeply passionate about, acted as a therapeutic mechanism for rewiring his brain's damaged networks. The intricate coordination of reading music, memorizing pieces, and feeling the keys under his fingers facilitated a kind of neuroplasticity that eventually restored his vision. It was as though the music served as a bridge, reconnecting the disrupted pathways in his brain and illustrating the human brain's incredible capacity to heal and adapt in ways that sometimes border on the miraculous.

These phenomena deeply intrigued me and fueled my enthusiasm to join a friend named Jason in exploring natural language processing and the state of machine learning at the time, a field whose roots stretch back decades to early chatbots. Our initial experiments focused on labeling and tokenizing text and on training language dictionaries to approximate realistic speech. However, the technology was not yet mature enough to productize our ideas. The best we could offer was building models that helped others apply the technology to their own needs, rather than a standalone product.
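A "language dictionary" in its simplest textbook form is a table of which word tends to follow which. The sketch below is a generic bigram (first-order Markov chain) generator, offered as an illustration of the idea rather than a reconstruction of our original code; the regex tokenizer and the `seed` parameter are illustrative choices.

```python
import random
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    """Lowercase the text and split it into word tokens (letters and apostrophes)."""
    return re.findall(r"[a-z']+", text.lower())


def build_bigrams(tokens: list) -> dict:
    """Map each word to the list of words observed immediately after it."""
    table = defaultdict(list)
    for prev, nxt in zip(tokens, tokens[1:]):
        table[prev].append(nxt)
    return dict(table)


def generate(table: dict, start: str, length: int, seed: int = 0) -> str:
    """Walk the table, sampling each next word in proportion to how often it followed."""
    rng = random.Random(seed)
    words = [start]
    while len(words) < length:
        followers = table.get(words[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        words.append(rng.choice(followers))
    return " ".join(words)
```

Because repeated followers appear multiple times in each list, `rng.choice` naturally favors the most common continuations, which is what makes the output sound vaguely like its training text.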

Second, to my family's shock, my mother suffered a stroke that left her paralyzed and wheelchair-bound. After a period stocking shelves, I returned to school and completed a degree in applied science and mathematics. The journey took six years in its entirety because a pivot in my studies required a much deeper dive into mathematics than my earlier focus on cellular biology, genetics, and neuroscience had. That exploration proved to be one of the most enriching intellectual pursuits I have undertaken. The deeper I delved into the sciences, the more I realized how minimal our collective understanding is. From quantum mechanics to historical scientific debates, and from discussions with Dr. Layman, one of the original inventors of the Gorilla Glass used in today's iPhones, to reading Richard Feynman's works, my curiosity knew no bounds. One quote that resonated with me, though I may be paraphrasing, was from Albert Einstein, suggesting that humans are limited only by the language we have created to define the things we don't yet understand. This insight has profoundly influenced my perspective on the pursuit of knowledge.

Part III: Starting on NLP & Watson

My reason for writing this is to tell the story of my journey with artificial intelligence, machine learning, language models, and natural language processing. That journey began in an unexpected place: my old band. I was the frontman of a funk-jazz band that built its music around pop songs by artists like Maroon 5. Our group included a soulful keyboard player, a classically trained cellist, an electric guitarist, a jazz drummer, and me, writing lyrics and performing for audiences. Our largest show, at NYU Skirball, drew several hundred people.

Jason, whom I briefly introduced in the previous section, was one of my bandmates' roommates and an IT major with a business focus at Rutgers University. Jason and I hit it off, often engaging in philosophical discussions about the universe, my budding faith, and the direction the world was heading.

One day, Jason approached me with a proposal to start a company. We named it Prolific, a name that might seem more fitting for a generative AI company today than it did back then. By then, I had taught myself Python and already had experience with operating systems and networking from the Cisco Networking Academy and the IT Essentials program I mentioned earlier. Jason, with his college education and technical background, taught me a great deal about setting up infrastructure and the flexibility of cloud services.

Note that this was more than ten years ago, when many of today's concepts were still emerging. We were transitioning from a world dominated by locally installed applications and on-premises servers to one where Software as a Service (SaaS) was beginning to flourish.

[Side note: This was around the time IBM's Watson became popular and sentiment analysis was starting to make waves. Watson, despite its merits, suffered from poor marketing, something I know through my father, who spent more than 25 years at IBM and shared insights into its internal workings after leaving the company. And when I search my mother's name on Google, she is still recognized for her role in creating the 802.11 wireless standard. She wasn't an engineer, but she facilitated the global conversations among the experts who designed the Wi-Fi standards we use today. Their example set me on the path I have followed ever since.]

After my experience with Prolific, I felt a strong desire to embark on a new venture. Drawing on the resources available through my employer, I decided to further my education in technology, a field that was increasingly appealing given my extensive background in the hard sciences and mathematics. This marked a significant shift from my original aspiration to become a physics teacher, an ambition inspired by a high school physics teacher who had profoundly influenced me. That experience underscored the immense value of mentorship and an inspiring educator's ability to ignite passion in students, a principle I've cherished throughout my career.

Part IV: Diving into the Tech

At Harvard, my academic pursuits deepened, particularly in natural language processing (NLP) and big data. I took four NLP courses and several others focused on databases, immersing myself in the nuances of different database types, including the advantages of NoSQL and of graph databases built on nodes and edges. I also studied how traditional relational databases work, how big data is managed, and how search evolved from the Lucene library into Elasticsearch and the log-analytics platforms, such as Sumo Logic, that followed. These studies revealed the layered nature of query languages and how they can be applied to improve user experience, much like the in-memory optimizations that make Apache Spark fast and efficient.
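Lucene, and therefore Elasticsearch, is built around an inverted index: instead of scanning every document for a term, it looks the term up and gets back the documents that contain it. The toy below shows only that core idea with simple AND queries; it is not Lucene's API, and it leaves out scoring, analyzers, and on-disk segments. The sample log lines are made up.

```python
import re
from collections import defaultdict


def tokenize(text: str) -> list:
    """Very small 'analyzer': lowercase and split on non-word characters."""
    return re.findall(r"\w+", text.lower())


class InvertedIndex:
    def __init__(self) -> None:
        self.postings = defaultdict(set)  # term -> ids of documents containing it

    def add(self, doc_id: int, text: str) -> None:
        for term in tokenize(text):
            self.postings[term].add(doc_id)

    def search(self, query: str) -> set:
        """Return ids of documents containing every query term (AND semantics)."""
        terms = tokenize(query)
        if not terms:
            return set()
        result = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            result &= self.postings.get(term, set())
        return result


index = InvertedIndex()
index.add(1, "Failed login from 10.0.0.5")
index.add(2, "Successful login from 10.0.0.7")
index.add(3, "Disk full on host db01")
print(index.search("login from"))    # {1, 2}
print(index.search("failed login"))  # {1}
```

The cost of a lookup depends on the size of the posting lists involved, not on the total number of documents, which is why this structure scales to log search.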

Under the guidance of Richard Joltes, one of the original builders of Watson's backend processing, my understanding of NLP expanded to cover tokenization, data cleaning, and preparing data for deep learning models in tools like Keras and TensorFlow. One fascinating area of my research was generative neural networks, exemplified by experiments that inverted the learning process to create new sounds, such as crackling flames or running water, from existing recordings. Although these experiments sometimes yielded unexpected results, like the sound of an inebriated person climbing stairs, they highlighted the importance of accounting for timing and rhythm in model training.
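Before text reaches a Keras or TensorFlow model, it typically has to be cleaned, mapped to integer ids, and padded to a fixed length. The sketch below walks through those steps in plain Python so each one is visible; it imitates what Keras's preprocessing utilities do rather than calling them, and the cleaning rules, the reserved `<pad>`/`<oov>` ids, and end-of-sequence padding are illustrative choices (Keras's `pad_sequences`, for comparison, pads at the front by default).

```python
import re
from collections import Counter

PAD, OOV = "<pad>", "<oov>"


def clean(text: str) -> list:
    """Lowercase, drop URLs and punctuation, and split into tokens."""
    text = text.lower()
    text = re.sub(r"https?://\S+", " ", text)
    text = re.sub(r"[^a-z0-9'\s]", " ", text)
    return text.split()


def build_vocab(corpus: list, max_words=None) -> dict:
    """Assign ids by frequency; 0 is reserved for padding and 1 for unknown words."""
    counts = Counter(tok for doc in corpus for tok in clean(doc))
    vocab = {PAD: 0, OOV: 1}
    for word, _ in counts.most_common(max_words):
        vocab[word] = len(vocab)
    return vocab


def encode(text: str, vocab: dict) -> list:
    """Replace each token with its id, using the OOV id for unseen words."""
    return [vocab.get(tok, vocab[OOV]) for tok in clean(text)]


def pad(sequences: list, maxlen: int) -> list:
    """Pad with 0 at the end, or truncate at the end, so every sequence has maxlen ids."""
    return [(seq + [0] * maxlen)[:maxlen] for seq in sequences]
```

Reserving id 0 for padding lets a model mask those positions out, and the OOV id keeps unseen words from crashing the pipeline at inference time.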

Recurrent neural networks (RNNs) opened up a fascinating range of possibilities for generating and manipulating many kinds of data, but the one that stood out to me was sound, particularly through the innovative approach of model reversal. Because RNNs process sequences of data, they have been instrumental in understanding and generating audio. Trained on sound data such as musical compositions or environmental recordings, an RNN learns to predict the next sample in a sequence, and feeding its own predictions back in lets it generate sound by extrapolating from learned patterns. The intriguing twist comes with reversing these models. By training a model on sound data in a forward sequence and then inverting its operation, it becomes possible to generate new sound sequences that retain the essence of the original data while producing entirely new auditory experiences. This reversed approach has not only expanded our capabilities in sound generation but has also offered novel insights into the nature of sound and of neural networks themselves, and into how their properties might be manipulated in creative and unforeseen ways. As a musician and artist, I found this exciting and novel. The very idea that a model built for detection and classification could be run in reverse to create something new was astounding, and it spoke directly to my own work.
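The next-sample loop is easier to see in code. The sketch below is a single-layer Elman RNN written in plain Python with random, untrained weights, so its output is not meaningful audio; training (backpropagation through time) and the model-reversal step are deliberately left out. What it does show is the structure described above: a hidden state carried from sample to sample, a prediction of the next sample, and generation by feeding each prediction back in as the next input. The hidden size, weight ranges, and 440 Hz seed snippet are arbitrary illustrative choices.

```python
import math
import random


class TinyRNN:
    """Elman RNN: h_t = tanh(w_x * x_t + W_h h_{t-1} + b); y_t = tanh(w_o . h_t + b_o)."""

    def __init__(self, hidden: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.hidden = hidden
        # Untrained random weights; a real model would learn these from audio.
        self.w_x = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.w_h = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(hidden)]
        self.b = [0.0] * hidden
        self.w_o = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
        self.b_o = 0.0

    def step(self, x: float, h: list) -> tuple:
        """Consume one sample, update the hidden state, and predict the next sample."""
        new_h = [
            math.tanh(self.w_x[i] * x + self.b[i]
                      + sum(self.w_h[i][j] * h[j] for j in range(self.hidden)))
            for i in range(self.hidden)
        ]
        y = math.tanh(sum(w * v for w, v in zip(self.w_o, new_h)) + self.b_o)
        return y, new_h

    def generate(self, seed_samples: list, n_steps: int) -> list:
        h = [0.0] * self.hidden
        y = 0.0
        for x in seed_samples:      # warm up the hidden state on real samples
            y, h = self.step(x, h)
        out = []
        for _ in range(n_steps):    # autoregression: each prediction becomes the next input
            out.append(y)
            y, h = self.step(y, h)
        return out


SAMPLE_RATE = 8000
snippet = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(64)]
generated = TinyRNN(seed=0).generate(snippet, n_steps=200)
```

The `tanh` on the output keeps every generated value in the same -1 to 1 range as normalized audio samples.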

My time at MIT and Harvard was filled with groundbreaking discoveries and innovations that inspired me to pursue my own projects. Starting in 2019, I focused on the challenges of relational databases, data normalization, and data transformation. This work led to a significant breakthrough in my theories on normalizing data before database ingestion, culminating in a capstone project at Harvard University. The project, centered on processing data in flight that could be collected in an agnostic, unstructured form, marked a pivotal moment in my academic and professional development. It brought together my studies and experiences, and it completed my master's degree at Harvard University.
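The capstone code itself isn't reproduced here, but the general idea of normalizing records in flight can be sketched. Below, records from different tools arrive with different key names and formats and are mapped onto one canonical shape before ingestion. The `FIELD_ALIASES` table, the canonical field names, and the example records are hypothetical; unmapped keys are kept under `extra` so nothing is silently dropped.

```python
from datetime import datetime, timezone

# Hypothetical mapping: each canonical field lists source-specific keys that mean the same thing.
FIELD_ALIASES = {
    "hostname": ("hostname", "host", "device_name", "computerName"),
    "ip": ("ip", "ip_address", "ipv4", "IPAddress"),
    "last_seen": ("last_seen", "lastSeen", "timestamp", "seen_at"),
}
KNOWN_KEYS = {key for aliases in FIELD_ALIASES.values() for key in aliases}


def normalize(record: dict) -> dict:
    """Map an arbitrary source record onto the canonical schema before ingestion."""
    out = {}
    for canonical, aliases in FIELD_ALIASES.items():
        # Take the first alias that is present and non-empty; otherwise None.
        out[canonical] = next(
            (record[k] for k in aliases if record.get(k) not in (None, "")), None
        )
    if out["hostname"] is not None:
        out["hostname"] = str(out["hostname"]).strip().lower()
    if isinstance(out["last_seen"], (int, float)):
        # Epoch seconds -> ISO 8601 in UTC, so every source compares the same way.
        out["last_seen"] = datetime.fromtimestamp(out["last_seen"], tz=timezone.utc).isoformat()
    # Preserve anything the schema doesn't know about.
    out["extra"] = {k: v for k, v in record.items() if k not in KNOWN_KEYS}
    return out
```

Normalizing before ingestion means the database only ever sees one shape per entity, so joins and deduplication do not have to account for every upstream tool's quirks.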

Part V: The Contemporary State & SkipFlo Inc

In 2021, after officially incorporating my business, SkipFlo Inc., I delved deeper into data models, particularly the tokenization of data. I experimented with breaking data down into syllables or other smaller segments and questioned the effectiveness of removing stop words, a practice I had previously learned to value. These explorations ventured far from the methods of my Harvard project and led me to develop a conceptual homotypic model. This model balanced the speed of relational databases with the nuanced relationships typical of graph databases, maintaining computational efficiency without sacrificing the integrity of data connections.
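One concrete reason to question stop-word removal: some general-purpose stop-word lists include negations such as "not", and dropping them can erase exactly the detail that matters. The sketch below uses a small, hypothetical stop-word list to show two sentences with opposite meanings collapsing into the same tokens, alongside character n-grams with boundary markers as one simple way of breaking words into smaller segments. Both are generic illustrations, not the tokenization scheme described above.

```python
import re

# Hypothetical stop-word list; note that it includes the negation "not".
STOP_WORDS = {"the", "a", "an", "is", "was", "not", "no", "to", "of", "and"}


def tokens(text: str) -> list:
    return re.findall(r"[a-z]+", text.lower())


def remove_stop_words(toks: list) -> list:
    return [t for t in toks if t not in STOP_WORDS]


def char_ngrams(word: str, n: int = 3) -> list:
    """Split a word into overlapping n-character segments, with < and > marking its edges."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


patched = "The patch was applied"
unpatched = "The patch was not applied"
print(remove_stop_words(tokens(patched)))    # ['patch', 'applied']
print(remove_stop_words(tokens(unpatched)))  # ['patch', 'applied']  (the negation is gone)
print(char_ngrams("patch"))                  # ['<pa', 'pat', 'atc', 'tch', 'ch>']
```

For compliance or configuration data, where "not applied" is the whole point, that collapse is a real cost; sub-word segments trade it for longer sequences but lose nothing.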

My experience with TensorFlow and other tools at Harvard, such as using breadth-first search to connect actors in the "Six Degrees of Kevin Bacon" problem, applying Manhattan-distance heuristics to maze solving, or developing an AI that could nearly always win Minesweeper, informed these developments. I applied similar thinking to build tools ranging from simple tic-tac-toe games to sophisticated database models. I aimed to stay abreast of the latest developments on GitHub and other platforms while building language models designed specifically for IT configuration management databases (CMDBs). This focus stemmed from my observations of the chaotic state of existing CMDBs and the inefficiency of discovery scans, which seldom captured everything in a timely manner. My aim was to integrate disparate sources and remain tool-agnostic, creating a product that excited me, even though it proved challenging to market, particularly within my network in cybersecurity, compliance, and executive leadership.
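Connecting two actors is an unweighted shortest-path problem, which breadth-first search solves: exploring co-stars level by level guarantees that the first time the target is reached, it is via the fewest films. (Manhattan distance, by contrast, is a heuristic for grid-based searches such as mazes.) The sketch below assumes a hypothetical `casts` dictionary mapping each movie to its actors; the movie titles and most actor names are invented placeholders.

```python
from collections import deque


def build_graph(casts: dict) -> dict:
    """Turn {movie: [actors]} into {actor: [(movie, co-star), ...]}."""
    graph = {}
    for movie, actors in casts.items():
        for a in actors:
            for b in actors:
                if a != b:
                    graph.setdefault(a, []).append((movie, b))
    return graph


def shortest_path(graph: dict, source: str, target: str):
    """Breadth-first search; returns [(movie, actor), ...] hops, or None if unconnected."""
    if source == target:
        return []
    parent = {source: None}       # actor -> (previous actor, shared movie)
    frontier = deque([source])
    while frontier:
        actor = frontier.popleft()
        for movie, costar in graph.get(actor, []):
            if costar in parent:  # already discovered; BFS found it by a shortest path
                continue
            parent[costar] = (actor, movie)
            if costar == target:
                path, node = [], target
                while parent[node] is not None:  # walk back to the source
                    prev, film = parent[node]
                    path.append((film, node))
                    node = prev
                return path[::-1]
            frontier.append(costar)
    return None


casts = {
    "Movie A": ["Alice", "Bob"],
    "Movie B": ["Bob", "Kevin Bacon"],
    "Movie C": ["Alice", "Carol"],
}
graph = build_graph(casts)
print(shortest_path(graph, "Carol", "Kevin Bacon"))
# [('Movie C', 'Alice'), ('Movie A', 'Bob'), ('Movie B', 'Kevin Bacon')]
```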

Convincing executives to grasp the nuances of relational databases, let alone my enhancements to them, was a daunting task. Still, these challenges only reinforced an already bold goal I had set upon finishing my undergraduate degree: to either successfully launch my own company or become a CISO by age 35. I was driven by the belief that leadership positions critically need technical knowledge. Too often, I watched leaders make ill-advised tool acquisitions based on relationships rather than a deep understanding of their operational environments. My journey has been a commitment to bridging the gap between technical expertise and executive decision-making, underscoring the importance of informed leadership in the rapidly evolving landscape of technology and cybersecurity.

During this period of exploration and innovation, my career wasn't focused solely on artificial intelligence. It also spanned architecture and development, often placing me in build-from-scratch ("net-zero") environments where I was either the first experienced staff member or the person tasked with launching new departments and nurturing talent. Amid these responsibilities, I fulfilled my early aspiration to teach by volunteering at a local Catholic high school during COVID, giving physics lessons to students seeking an additional layer of science education.

At Netskope, my role as a principal security architect had me deeply involved in detection engineering and response design for FedRAMP environments, eventually extending to FedRAMP High and DoD-level applications. This experience gave me exposure to the FBI's InfraGard program and to malware intelligence-sharing platforms, strengthening the cybersecurity foundation of my knowledge and of the models I built after Netskope. While I didn't apply these tools directly, understanding standards like STIX/TAXII and working with the National Vulnerability Database were crucial in developing my organizational goals.
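For readers unfamiliar with STIX/TAXII: STIX 2.1 is a JSON format for describing threat intelligence, and TAXII is the HTTPS-based protocol for exchanging it. The sketch below builds a minimal STIX 2.1 Indicator object using only the standard library; the indicator name and IP address (from the 198.51.100.0/24 documentation range) are placeholders, and in practice a maintained library such as the OASIS `stix2` Python package would handle validation.

```python
import json
import uuid
from datetime import datetime, timezone


def stix_timestamp(dt: datetime) -> str:
    """STIX timestamps are UTC RFC 3339 strings ending in 'Z'; milliseconds are used here."""
    return dt.astimezone(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")


def make_indicator(name: str, pattern: str, now=None) -> dict:
    """Build a minimal STIX 2.1 Indicator with its required properties plus a name."""
    ts = stix_timestamp(now or datetime.now(timezone.utc))
    return {
        "type": "indicator",
        "spec_version": "2.1",
        "id": f"indicator--{uuid.uuid4()}",
        "created": ts,
        "modified": ts,
        "name": name,
        "pattern": pattern,
        "pattern_type": "stix",
        "valid_from": ts,
    }


indicator = make_indicator("Known-bad IP (placeholder)", "[ipv4-addr:value = '198.51.100.7']")
print(json.dumps(indicator, indent=2))
```

Because the object is plain JSON with a typed `id`, any TAXII server or SIEM that speaks STIX 2.1 can ingest it without knowing which tool produced it.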

Reflecting on this journey, my coming to Faith, the mentorship I've cherished, and the opportunities I've been blessed with have been instrumental in bringing me to where I stand today. I'm compelled to share this story against the backdrop of the current hype around generative AI, which has become as ubiquitous a topic as cryptocurrency once was. Everyone seems to claim involvement with generative AI, yet there is a clear gap between mentioning it and using it effectively. I see many people who lack a deep understanding of how it works; my point is not to belittle anyone, given the genuine complexity of concepts like grokking, but to urge caution in recognizing expertise in this rapidly evolving field.

Despite the enthusiasm for generative AI, the discussion often feels superficial, with many jumping on the bandwagon without a firm grasp of the underlying technology. When I discuss tokenization and large language models (LLMs), topics I've been deeply involved with for years, I'm sometimes met with skepticism, as if my insights were mere sales pitches. That perception is disheartening, yet I maintain that anyone striving to understand these technologies is moving in the right direction. Now more than ever, it's crucial to foster an environment that values genuine curiosity and effort to understand the intricacies of AI and its applications, one that avoids quick judgments and embraces a more nuanced appreciation of the technology's potential and its pioneers.

Thanks for reading! I appreciate anyone who got this far, and I'm looking forward to an amazing future with all the people I meet on this wild journey. Feeling blessed to know all of you.

-Chris Yacone